Bluecore enables enterprise retailers to send targeted email campaigns based on ML/AI models. The experimentation feature, critical for optimizing conversion and ROI, was underperforming despite being fully functional. Engagement was low, adoption was inconsistent, and experiment results were often invalid.

Marketers weren’t creating experiments because the experience was fragmented, unclear, and buried within the campaign creation workflow. The design problem wasn’t aesthetic; it was systemic. Our goal wasn’t to “redesign a feature” but to diagnose and repair the user journey surrounding it.

I coached the design team to look beyond surface usability and think about the product ecosystem: where friction originates before and after a user touches a feature. Instead of jumping to solutions, we dissected analytics, shadowed customers, and mapped where the experience broke down across campaign planning, execution, and measurement.

This approach helped the team build product empathy, align with PMs and data scientists on behavioral metrics, and develop a repeatable model for evidence-based design intervention.

Through analytics and customer interviews, we uncovered four root issues:

The time between launching an experiment and reaching a statistically significant result can be several weeks. With tens or sometimes hundreds of campaigns running at once, it’s difficult for users to remember which experiments are live and what state each one is in.

Our solution was a unified dashboard for all experiments, giving visibility into live status, ownership, and outcomes. This reduced cognitive load and increased accountability.

In the UI, there was no clear indication of when an experiment had reached a statistically significant result, so tests could run indefinitely.

We introduced automatic completion alerts and surfaced experiment metrics directly within campaigns, replacing hidden reports with contextual insights.

Changing an email campaign while an experiment is running compromises the validity of the data and resets the test. Because this was never explicitly communicated, it led to many invalid experiments.

We recommended adding clear system warnings and workflow checks when users edit live experiments, with the goal of dramatically reducing the number of tests invalidated by accident.

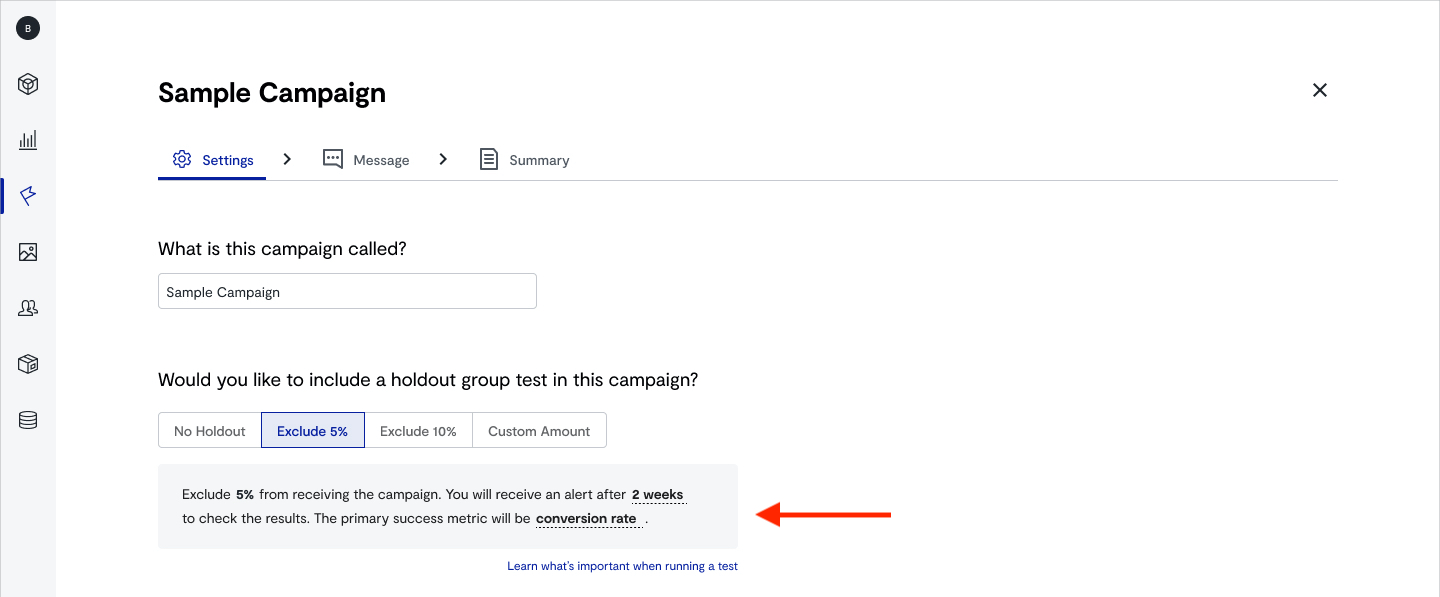

Experimentation is complex, and with full-featured campaign functionality, it was not clear to users which combination of options would lead to success.

To simplify, we consolidated experiment configuration into a single flow with natural-language feedback, helping marketers understand the impact of their choices.

These changes aimed to improve visibility, reduce user error, and increase experiment completion rates. More importantly, they are intended to further solidify a scalable model for behavior-led design decisions at Bluecore.

This project was less about pixels and more about product maturity: helping the organization see design as a diagnostic discipline that drives business outcomes. It reinforced my belief as a leader that great product design isn’t just about improving interfaces; it’s about improving understanding, confidence, and decision-making across teams.